Inside OpenAI’s path to accelerating the future of coding

Romain Huet, Head of Developer Experience at OpenAI, on how AI is transforming product development from the inside out

Hey, I’m Timothe, cofounder of Stellar & based in Paris.

I’ve spent the past few years helping 500+ startups in Europe build better product orgs and strategies. Now I’m sharing what I’ve learned (and keep learning) in How They Build. For more: My YouTube Channel (🇫🇷) | My Podcast (🇫🇷) | Follow me on LinkedIn.

OpenAI was founded in December 2015 by Sam Altman, Greg Brockman, Ilya Sutskever, and others to ensure that artificial general intelligence (AGI) benefits all of humanity. The company is headquartered in San Francisco, and its mission is to develop safe, powerful AI systems and deploy them responsibly.

Growth data:

800M weekly active users

1 million business customers

7M+ business seats

4M+ developers from 200+ countries have built on the OpenAI API

$10B ARR from pure product revenue in 30 months.

API usage among coding startups has increased more than 300% this year.

Team of 3,000+ employees spanning research, engineering, product, and more.

Position & strategy:

OpenAI leads the AI industry in applied research and product integration, balancing cutting-edge model development (GPT, DALL·E, Sora, Codex) with accessible consumer and enterprise products. Its strength lies in merging research breakthroughs with production-grade product execution at an unparalleled pace.

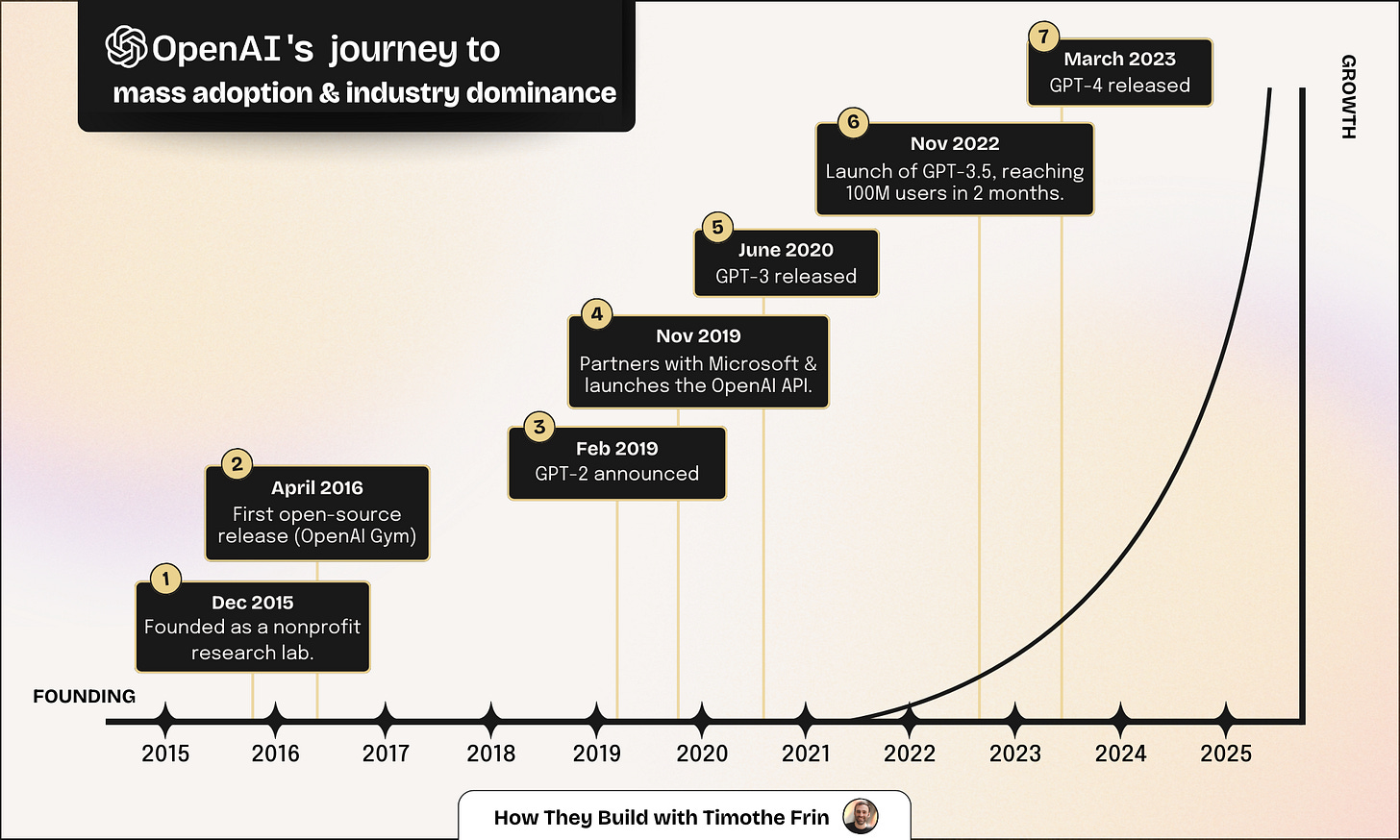

Milestones:

2015: OpenAI is founded as a nonprofit AI research lab.

2019: Establishes a multiyear partnership with Microsoft and begins commercializing models (early API work, leading to the OpenAI API launch in 2020).

2020–2021: Launches the OpenAI API and Codex, enabling developers to build on GPT-3 and to power tools like GitHub Copilot.

2022: Launch of ChatGPT, which rapidly scales to over 100M users.

2023: Release of GPT-4 and expansion of ChatGPT into paid and enterprise offerings, making advanced models broadly accessible to consumers and businesses.

2024–2025: Rollout of GPT-5 family models and agentic tools such as AgentKit, Atlas, and the Responses API, plus a richer ChatGPT experience for developers.

2025: Release of Sora 2, a next-generation video model, and GPT-5.1-Codex-Max, an advanced agentic coding assistant.

I sat down with Romain Huet, Head of Developer Experience at OpenAI, during OpenAI’s DevDay in San Francisco last October, which I had the chance to attend at OpenAI’s invitation. Thanks to Maud Camus for the introduction 🫶🏽.

DevDay is the company’s annual gathering where thousands of developers discover OpenAI’s newest models, tools, and product breakthroughs. We recorded this episode right in the middle of the action, in a buzzing venue full of builders, so you may hear a bit of background noise. Thanks for your patience; the insights Romain shared were absolutely worth it.

Disclaimer: The organizational choices and technical solutions shared in this newsletter aren’t meant to be copied and pasted as-is. Always keep your company’s context in mind before adopting something that works elsewhere! 😊

About Romain

Romain leads the team that connects OpenAI’s research and product functions with millions of developers using its API and SDKs. His group manages the entire developer portfolio, from the Apps SDK for ChatGPT to AgentKit and Codex, OpenAI’s agentic coding model.

The goal: ensure that every developer, from solo builders to Fortune 500 teams, can extend OpenAI’s capabilities into real applications.

“Developer experience for me is about how we bridge this gap between our research and product teams and the developer community at large,” said Romain Huet.

This bridge is critical because OpenAI’s products often emerge directly from research breakthroughs. For example, Codex evolved from GPT-5 fine-tuning work, transforming from static code generation into an autonomous coding agent that runs, checks, and iterates on its own work.

Building at the speed of research

OpenAI’s product culture revolves around one principle: ship fast, learn faster.

The Codex team has been releasing updates “almost every day,” reflecting a company-wide bias toward experimentation and iteration.

Romain notes that OpenAI’s pace of innovation requires constant recalibration: models evolve monthly, so teams must rethink product assumptions continuously. What makes this work is the tight coupling between research and applied product teams, each learning from the other.

Unlike traditional software companies that iterate on fixed infrastructure, OpenAI’s foundation changes with every new model. Romain compared it to his time at Stripe:

“At Stripe, we built elegant layers over a stable foundation. At OpenAI, the foundation itself keeps shifting.”

That dynamic makes adaptability and communication more valuable than long-term certainty. OpenAI’s teams plan in short loops, treating each model release as both an R&D milestone and a product opportunity.

From feedback to roadmap: turning signals into strategy

With millions of developers using its tools, OpenAI relies heavily on community feedback. Romain emphasized that the challenge isn’t getting input; it’s filtering it.

“We have feedback every hour or every minute. The challenge is not getting it, but filtering through the noise,” said Romain.

OpenAI collects signals through X, Discord, GitHub, and in-product telemetry. Product teams then synthesize this into roadmap decisions. For instance, early users of Codex resisted the idea of a fully cloud-based coding agent. Developers wanted local control, so OpenAI launched Codex CLI and IDE integrations in response.

The company’s feedback system acts like a fast-moving funnel: qualitative input becomes rapid product iteration. Every release, from APIs to SDKs, is a result of community-led evolution.

The research-product symbiosis

One of OpenAI’s structural advantages is its tight collaboration between research and product. Unlike many tech companies where research is siloed, OpenAI’s product and research teams share goals, feedback loops, and even staff.

When GPT-5 Codex was built, it wasn’t just research fine-tuning a model; it was research and product co-designing a new developer interface for AI agents. The result was a model capable of running its own code, checking results, and reasoning iteratively, built jointly by both divisions.

“We could have stopped at GPT-5, but by having research and product work together, we created GPT-5 Codex, a model better suited for agentic coding,” explained Romain.

This close loop extends to customers. Companies running private evaluations in finance, law, and healthcare provide data that flows back to both product and research, creating a virtuous cycle of learning.

Inside OpenAI’s product process

Every OpenAI product shares three common traits:

High-velocity iteration → Teams ship constantly and treat releases as experiments.

Full-stack ownership → Product managers, engineers, and researchers collaborate on end-to-end outcomes.

Model-driven design → The capabilities of new models define what becomes possible.

Romain shared that OpenAI evaluates success through usefulness and adoption, not vanity metrics. For instance, when early GPTs inside ChatGPT saw high enterprise usage but low developer satisfaction, the team rethought the approach. The result was the new Apps SDK, a better way for developers to create interactive AI experiences.

Every iteration aims to translate new model capabilities into real user value. That’s why launches like AgentKit, Responses API, and Codex SDK feel tightly aligned with model progress rather than arbitrary product calendars.

How AI reshapes product and engineering teams

AI is changing not just the products OpenAI ships but how teams work.

Inside OpenAI, engineers now “manage” agents as part of their workflow. Product managers prototype directly instead of just writing PRDs.

“PMs can build prototypes now. You should actually have a prototype instead of a PRD. Everyone’s getting more technical with better tools,” Romain explained.

He believes every role is becoming AI-native: engineers become orchestrators of agentic systems; PMs become creative builders; and companies move faster as repetitive work gets automated.

This internal evolution at OpenAI previews what future organizations might look like: smaller, more technical, and amplified by AI copilots.

[Visual: Illustration comparing traditional vs. AI-native product teams.]

Error: when innovation outpaces users

OpenAI’s greatest challenge isn’t technological. It’s timing.

When Codex first launched, it lived only in the cloud. The team believed this was the future of software creation, but developers weren’t ready. They wanted local, integrated tools.

“We went a little too far ahead in the future all at once,” Romain admitted.

The lesson: even world-class innovation must meet users where they are. OpenAI now releases features faster but grounds decisions in developer experience and adoption signals. Each misstep becomes a calibration point for the next cycle.

Key takeaways:

OpenAI builds products on shifting foundations, making adaptability the core competency.

Developer feedback isn’t just input; it’s a structural loop that defines OpenAI’s roadmap.

Product and research collaboration enables breakthroughs like GPT-5 Codex, merging innovation and usability.

The company prioritizes usefulness over perfection: fast shipping with iterative learning.

Internal teams model the AI future: PMs prototype, engineers orchestrate agents.

Innovation speed creates risk; successful timing comes from deep community listening.

AI-native organizations will resemble OpenAI: small, technical, and continuously augmented by agents.

My full interview with Romain Huet, Head of Developer Experience at OpenAI

Dive deeper into this topic with Romain Huet, Head of Developer Experience at OpenAI, in my latest podcast episode:
